Spark Authentication

Spark has an internal mechanism that authenticates executors with the driver controlling a given application. This mechanism is enabled using the Cloudera Manager Admin Console, as detailed in Enabling Spark Authentication.

When Spark on YARN is running on a secure cluster, users must authenticate to Kerberos before submitting jobs, as detailed in Running Spark Applications on Secure Clusters.

Enabling Spark Authentication

Minimum Required Role: Security Administrator (also provided by Full Administrator)

Spark has an internal mechanism that authenticates executors with the driver controlling a given application. This mechanism is enabled using the Cloudera Manager Admin Console, as detailed below. Cluster administrators can enable the spark.authenticate mechanism to authenticate the various processes that support a Spark application.

- Log in to the Cloudera Manager Admin Console.

- Select (or ).

- Click the Configuration menu.

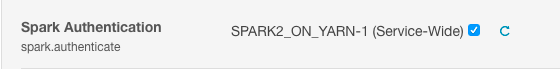

- Scroll down to the Spark Authentication setting, or search for spark.authenticate to find it.

- In the Spark Authentication setting, click the checkbox next to the Spark (Service-Wide) property to activate the setting.

- Click Save Changes.

Note: If your cluster supports Spark 2, you must make the same change to the Spark 2 setting.


- Restart YARN:

  - Select .

  - Select Restart from the Actions drop-down selector.

- Re-deploy the client configurations:

  - Select

  - Select Deploy Client Configurations from the Actions drop-down selector.
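The Spark Authentication checkbox corresponds to the Spark property spark.authenticate. As a minimal sketch, assuming the redeployed client configuration places it in /etc/spark/conf/spark-defaults.conf (a common gateway-host location that may differ on your cluster), the hypothetical shell function below checks whether the property is set to true. It accepts both the key=value and the whitespace-separated key value forms, which are both valid in spark-defaults.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) only if spark.authenticate is
# set to true in the given spark-defaults.conf. The default path below is an
# assumption; pass the correct file for your deployment as the first argument.
spark_auth_enabled() {
  local conf_file="${1:-/etc/spark/conf/spark-defaults.conf}"
  # Match "spark.authenticate=true", "spark.authenticate true", or
  # "spark.authenticate = true", but not keys such as spark.authenticate.secret.
  grep -Eq '^[[:space:]]*spark\.authenticate[[:space:]=]+true[[:space:]]*$' "$conf_file"
}

# Example, run on a gateway host after redeploying client configurations:
#   if spark_auth_enabled; then echo "enabled"; else echo "not enabled"; fi
```

For a Spark 2 service, pass the path of that service's spark-defaults.conf instead; its location depends on your deployment.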

Running Spark Applications on Secure Clusters

Secure clusters are clusters that use Kerberos for authentication. On secure clusters, the Spark History Server uses Kerberos automatically and requires no additional configuration.

Before running Spark applications, users must first authenticate to Kerberos using kinit:

$ kinit
ldap@EXAMPLE-REALM.COM's password:
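kinit can also be given an explicit principal, or a keytab when a password prompt is not practical, for example in scripts. The sketch below is hypothetical (the function name and keytab path are placeholders): it requests a ticket only when klist -s reports that no valid ticket is cached. klist -s prints nothing and reports the result only through its exit status.

```shell
#!/usr/bin/env bash
# Hypothetical helper: obtain a Kerberos ticket only if none is cached.
# klist -s is silent and exits non-zero when the credential cache holds
# no valid, unexpired ticket.
ensure_kerberos_ticket() {
  local principal="$1"
  if klist -s; then
    return 0                 # a valid ticket is already cached
  fi
  kinit "$principal"         # prompts for the principal's password
}

# Examples (the principal is the one shown above; substitute your own):
#   ensure_kerberos_ticket ldap@EXAMPLE-REALM.COM
#   klist                    # list the cached ticket and its expiry time
# With a keytab instead of a password (placeholder path):
#   kinit -kt /path/to/ldap.keytab ldap@EXAMPLE-REALM.COM
```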

After authenticating to Kerberos, users can submit applications with spark-submit as usual. The following command submits the SparkPi example job that ships with Spark. The path to the examples JAR is built from the $SPARK_HOME environment variable, so adjust it for your installation:

$ spark-submit --class org.apache.spark.examples.SparkPi --master yarn \
    --deploy-mode cluster $SPARK_HOME/lib/spark-examples.jar 10
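The same submission can be wrapped so that it stops early with a clear message when the user has not yet run kinit, rather than surfacing a Kerberos error from spark-submit. This is a minimal sketch: submit_spark_pi is a hypothetical name, and it assumes SPARK_HOME is set and the examples JAR is at the path used in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: submit the SparkPi example in YARN cluster mode only
# when a Kerberos ticket is cached. Assumes SPARK_HOME is set and that the
# examples JAR is at $SPARK_HOME/lib/spark-examples.jar.
submit_spark_pi() {
  local partitions="${1:-10}"   # SparkPi's argument: number of partitions
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set." >&2
    return 1
  fi
  if ! klist -s; then
    echo "No valid Kerberos ticket found; run kinit first." >&2
    return 1
  fi
  spark-submit --class org.apache.spark.examples.SparkPi \
    --master yarn \
    --deploy-mode cluster \
    "$SPARK_HOME/lib/spark-examples.jar" "$partitions"
}

# Usage: submit_spark_pi 10
```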

©2016 Cloudera, Inc. All rights reserved.